In collaboration with Intel, CAN is pleased to announce the launch of the Intel Perceptual Computing Challenge! This is your opportunity to change the way people interface with computers, with a chance to win $100,000 and get your app on new Perceptual Computing devices.

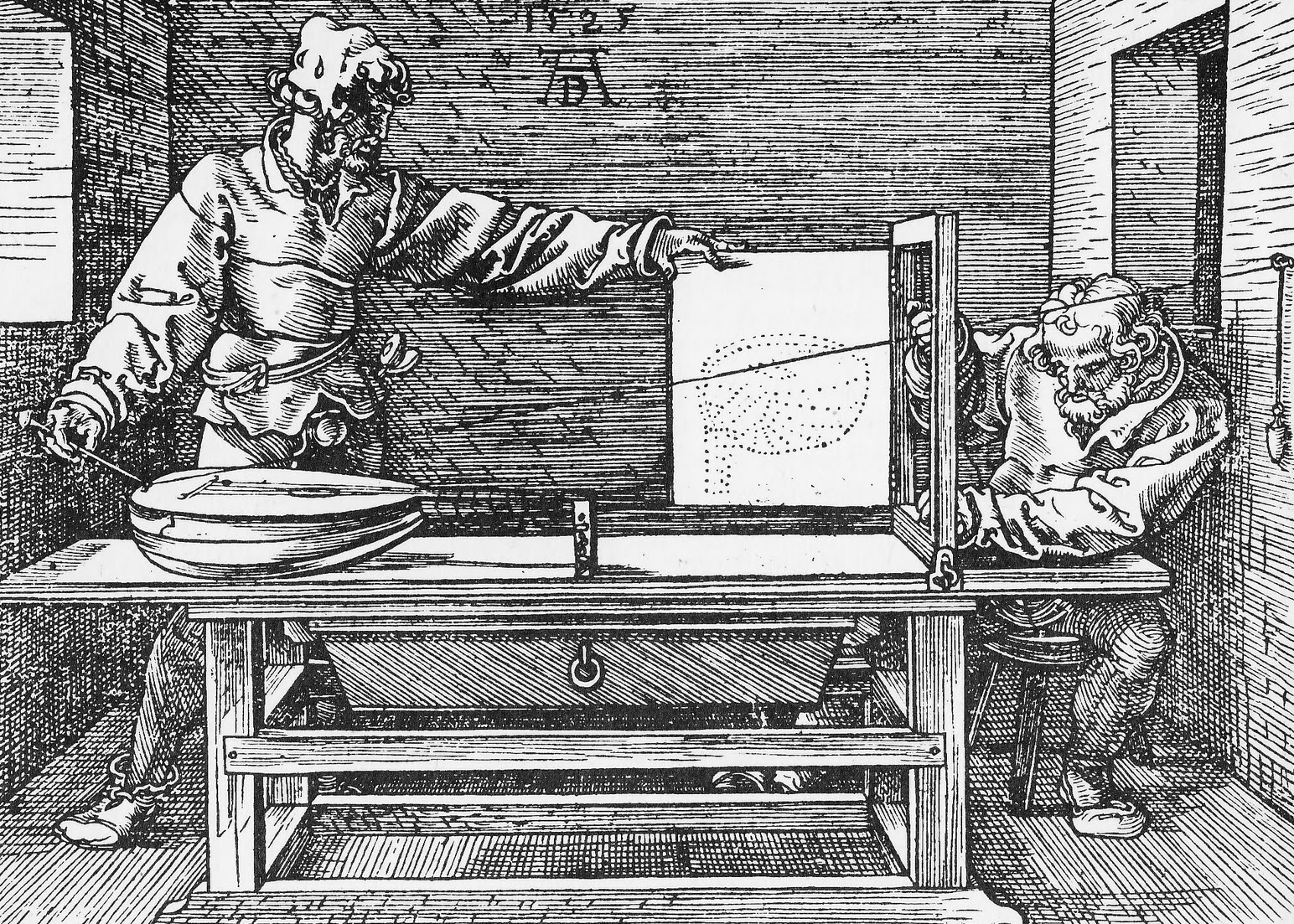

Perceptual computing is changing human–computer interaction. It’s been a while since there has been any disruption in the way we interface with traditional computers. The Intel Perceptual Computing Challenge wants your apps to change that.

We’re looking for the best of the best. Check your semicolons and really think about how you can change the world of computing. You can register and submit your ideas until 1 July 2013 (extended from 17 June 2013), when the first phase of the competition ends.

Important Dates

- Idea Submission / Until 1 July 2013 (Extended from 17 June 2013)

- Judging & Loaner Camera Fulfilment / 2 July 2013 – 9 July 2013 (Updated)

- Early Profile Form Submission / 10 July 2013 – 31 July 2013 (Updated)

- Early Demo App Submission / 10 July 2013 – 20 August 2013 (Updated)

- Final Demo App Submission / 10 July 2013 – 26 August 2013 (Updated)

First Stage – Deadline 1 July 2013 (Extended from 17 June 2013)

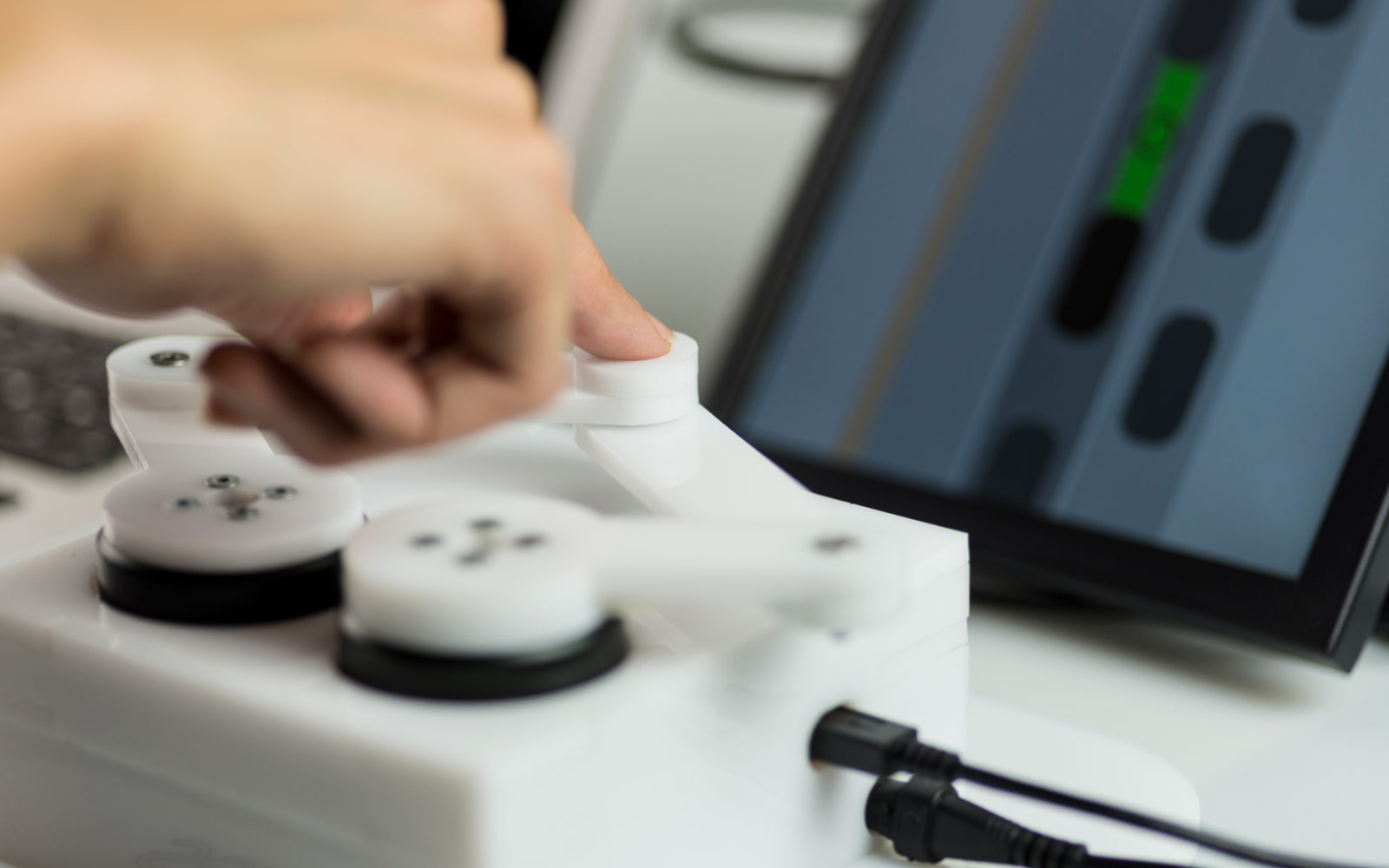

This software development competition is seeking innovative ideas for a software application (“App”) using Intel’s new Software Development Kit (“SDK”), which can be used with a web camera able to perceive gestures, voice and touch (on screen). The idea must fit one (1) of the following categories:

- Gaming

- Productivity

- Creative User Interface

- Open Innovation

No code submission is necessary at this stage, but an idea submission is mandatory to proceed to the next stage and receive one of the 750 Creative* Interactive Gesture Camera Developer Kits.

The SDK (Second Stage)

Intel’s Perceptual Computing SDK is a cocktail of algorithms comparable to the Microsoft Kinect SDK, offering high-level features such as finger tracking, facial feature tracking and voice recognition. These sit alongside a fairly comprehensive set of low-level ‘data getter’ functions for its time-of-flight camera hardware.

Data from the time-of-flight sensor arrives noisy, in a way that differs from the Kinect (imagine white noise applied to your depth stream). Intel supplies a very competent routine for cleaning it up, producing real-time, noise-free depth data with smooth, continuous surfaces. Unlike the Kinect, much finer details can be detected, as each pixel measures independently of all others.
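
Intel’s cleanup routine is not described here, so the following is only a generic illustration of the kind of spatial filtering that tames per-pixel noise. It is a minimal Python sketch of a 3×3 median filter, assuming a depth frame is a plain list of rows of integer depth values. The function name `median_filter_depth` is hypothetical and is not part of the Intel SDK.

```python
from statistics import median


def median_filter_depth(depth, radius=1):
    """Hypothetical helper (not an Intel SDK call): generic median filter.

    Each output pixel is the median of its (2*radius+1) x (2*radius+1)
    neighbourhood. Out-of-range neighbours are clamped to the nearest edge pixel.
    """
    h = len(depth)
    w = len(depth[0]) if h else 0
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Gather the neighbourhood, clamping coordinates at the frame borders.
            window = [
                depth[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            # The window always has an odd number of values, so the median is one of them.
            row.append(median(window))
        out.append(row)
    return out
```

A median is used here rather than a mean because a single noisy spike cannot pull the result, and a sharp depth step (such as a hand in front of a wall) survives without blurring. For example, `median_filter_depth([[500, 500, 500], [500, 900, 500], [500, 500, 500]])` returns a frame of all 500s.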

Interestingly, rather than prioritising support for ‘C++, .NET, etc.’, Intel names a set of creative coding platforms as its primary targets: Processing, openFrameworks and Unity. Under the hood, this translates directly to Java, C++ and C#/.NET support. Despite the focus on creative coding platforms, the SDK currently supports only Windows.

The ‘openFrameworks support’ is a single example that directly calls Intel’s computer-scientist-riffing low-level API. In our opinion, this demonstrates rather shallow support for openFrameworks, but it also means you’ll be equally well placed if you’re coming from Cinder or plain C++. Some friendlier, more complete openFrameworks addons are already popping up on GitHub and wrap some features nicely, but they are still far from covering Intel’s complete functionality.

The SDK ships with a comprehensive set of examples written in C++, demonstrating features such as head-tracked VR, gesture remote control and an augmented reality farm.

Events

Intel is organising a number of events around the world to support the competition. From workshops to hackathons, join others to develop and create!

- United States / Santa Clara / Workshop / June 13th / 6-9pm

- United States / San Francisco / Hackathon / June 21st/22nd / 7pm-10pm

- Germany / Munich / Workshop / June 8th / 2-9pm

- Germany / Munich / Hackfestival / June 22nd/23rd / 1pm-3pm (CAN will be present)

Prizes

This is your chance to be one of the Perceptual Computing leaders. Win a share of the $1 million prize pool and get your apps on next-generation devices. Check out these prizes:

Grand Prize: $100,000 USD + $50,000 in Marketing and Development Consultations

For the ultimate Perceptual Computing Application Demo!

For each category:

- One (1) First Place Prize of $75,000 + $12,500 in Marketing and Development Consultations each

- Three (3) Second Place Prizes of $10,000 each

- Twenty (20) Third Place Prizes of $5,000 each

Plus even more opportunities to get your app to market and thousands of dollars for finalists who submit early!

CAN Team

Together with the Intel team, CAN is participating in the judging of entries. The CAN judges are:

Filip Visnjic

Architect, educator, curator and new media technologist from London. He is the founder and editor-in-chief of CreativeApplications.Net, co-founder and curator of the Resonate festival, and editorial director of HOLO magazine.

Elliot Woods

Digital media artist, technologist, curator and educator from Manchester, UK. Elliot creates provocations towards future interactions between humans and socio-visual design technologies (principally projectors, cameras and graphical computation). He is the co-founder of Kimchi and Chips, a contributor to the openFrameworks project (a ubiquitous toolkit for creative coding) and an open source contributor to the VVVV platform.

CAN Contest

To kick off the competition, we are giving away THREE (3) Creative* Interactive Gesture Camera Developer Kits. To enter, simply leave a comment below answering our question: “How would you change human–computer interaction?” Go wild, go crazy, speculate!

This contest is now closed. Winners:

The biggest barrier to change in human-computer interaction is our preconceived notions and actions about how we have interacted with machines in the past. Instead of deciding by analogy, we have to make decisions based on logic. As we get older, we come to expect interactions like those of the past. This is analogy-based decision making. To get past this barrier, I would work closely with children (I have a 4-year-old and a 6-year-old), as they interact with their environment based on almost pure logic. Children will be the ones who inherit all the interactions we invent today, so they should naturally be part of the development.

I would like to change human-computer interaction from computer-centric, where we control a mouse and keyboard to see the result on a screen, to human-centric, where we manually manipulate actual objects and see how they change. Objects can be augmented (for example, by projecting onto them) with all the information and tools computers provide, while keeping the connection with the physical world.

I think feedback is what’s lacking these days. I can’t say that I’m too excited about gesture recognition, because it seems a rather quaint improvement without adequate haptic feedback. So, I would pledge support towards research into devices that enable machines to wave back at us. Or, better yet, push back. More specifically, modular multi-touch controllers that could be assembled into a variety of configurations would be ideal. This type of controller assembly needs to sense touch, but also provide mechanical feedback. The ultimate goal of such controllers is to remove our dependency on binary switches and non-pressure-sensitive screens, increase the practical range of fine control, and enable control systems to evolve (change state), giving fast access to various tools and their applications.

Rules and information

- Postage and Packing included.

- You must be over 18 years of age. There will be a total of THREE winners for this competition.

- Winners will be chosen by the CAN team based on the best comments.

- Winners will be contacted via email and asked to provide their full name and postal address. If they wish to pass the prize on to another person, we will need that person’s name and postal address. If a winner does not respond by the following Thursday (30th May), we will pick another winner.

Good Luck!