MySQL Parallel Replication: inventory, use-cases and limitations

- 1. MySQL Parallel Replication: inventory, use-cases and limitations Jean-François Gagné (System Engineer) jeanfrancois DOT gagne AT booking.com Presented at Percona Live Amsterdam 2016

- 2. Booking.com ● Based in Amsterdam since 1996 ● Online Hotel and Accommodation (Travel) Agent (OTA): ● +1.040.000 properties in 226 countries ● +1.100.000 room nights reserved daily ● 42 languages (website and customer service) ● +13.000 people working in 184 offices worldwide ● Part of the Priceline Group ● And we use MySQL: ● Thousands (1000s) of servers, ~90% replicating ● >150 masters: ~30 with >50 slaves and ~10 with >100 slaves

- 3. Booking.com’ ● And we are hiring! ● MySQL Engineer / DBA ● System Administrator ● System Engineer ● Site Reliability Engineer ● Developer / Designer ● Technical Team Lead ● Product Owner ● Data Scientist ● And many more… ● https://workingatbooking.com/

- 4. Session Summary 1. Introducing Parallel Replication 2. MySQL 5.6: schema-based, MariaDB 10.0: out-of-order and in-order, MariaDB 10.1: +optimistic, MySQL 5.7: +logical clock 3. Benchmark Results from Booking.com

- 5. // Replication ● Relatively new because it is hard ● It is hard because of data consistency ● Running trx in // must give the same result on all slaves (= the master) ● Why is it important? ● Computers have many cores, using a single one for writes is a waste ● Some computer resources can give more throughput when used in parallel (example: RAID1 has 2 disks, so we can do 2 IOs in parallel)

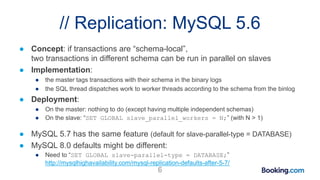

- 6. // Replication: MySQL 5.6 ● Concept: if transactions are “schema-local”, two transactions in different schemas can be run in parallel on slaves ● Implementation: ● the master tags transactions with their schema in the binary logs ● the SQL thread dispatches work to worker threads according to the schema from the binlog ● Deployment: ● On the master: nothing to do (except having multiple independent schemas) ● On the slave: “SET GLOBAL slave_parallel_workers = N;” (with N > 1) ● MySQL 5.7 has the same feature (DATABASE is the default for slave_parallel_type) ● MySQL 8.0 defaults might be different: ● Need to “SET GLOBAL slave_parallel_type = 'DATABASE';” http://mysqlhighavailability.com/mysql-replication-defaults-after-5-7/

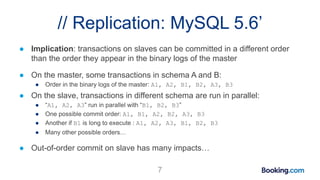

- 7. // Replication: MySQL 5.6’ ● Implication: transactions on slaves can be committed in a different order than the order they appear in the binary logs of the master ● On the master, some transactions in schemas A and B: ● Order in the binary logs of the master: A1, A2, B1, B2, A3, B3 ● On the slave, transactions in different schemas are run in parallel: ● “A1, A2, A3” run in parallel with “B1, B2, B3” ● One possible commit order: A1, B1, A2, B2, A3, B3 ● Another if B1 takes long to execute: A1, A2, A3, B1, B2, B3 ● Many other possible orders… ● Out-of-order commit on slaves has many impacts…

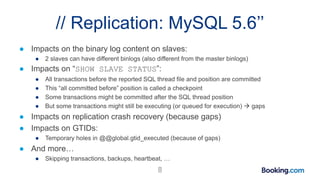
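
The commit orders listed on the slide above share one property: inside each schema, the order of the master's binary log is preserved. A minimal Python sketch of that rule follows; the function names are hypothetical and this models only the ordering constraint, not the actual MySQL dispatcher.

```python
from collections import defaultdict

def dispatch_by_schema(binlog):
    """Group transactions per schema, keeping binlog order inside each schema."""
    queues = defaultdict(list)
    for schema, trx in binlog:
        queues[schema].append(trx)
    return dict(queues)

def is_possible_commit_order(binlog, commit_order):
    """True if commit_order keeps, for every schema, the order of the master binlog."""
    if sorted(commit_order) != sorted(trx for _, trx in binlog):
        return False  # a transaction is missing, duplicated or unknown
    schema_of = {trx: schema for schema, trx in binlog}
    seen = defaultdict(list)
    for trx in commit_order:
        seen[schema_of[trx]].append(trx)
    return dict(seen) == dispatch_by_schema(binlog)

# Binlog order from the slide: A1, A2, B1, B2, A3, B3
binlog = [("A", "A1"), ("A", "A2"), ("B", "B1"),
          ("B", "B2"), ("A", "A3"), ("B", "B3")]

print(dispatch_by_schema(binlog))
print(is_possible_commit_order(binlog, ["A1", "B1", "A2", "B2", "A3", "B3"]))
print(is_possible_commit_order(binlog, ["A1", "A2", "A3", "B1", "B2", "B3"]))
print(is_possible_commit_order(binlog, ["A2", "A1", "B1", "B2", "A3", "B3"]))
```

The last order swaps A1 and A2 inside schema A, so the check rejects it.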

- 8. // Replication: MySQL 5.6’’ ● Impacts on the binary log content on slaves: ● 2 slaves can have different binlogs (also different from the master binlogs) ● Impacts on “SHOW SLAVE STATUS”: ● All transactions before the reported SQL thread file and position are committed ● This “all committed before” position is called a checkpoint ● Some transactions might be committed after the SQL thread position ● But some transactions might still be executing (or queued for execution): these are gaps ● Impacts on replication crash recovery (because of gaps) ● Impacts on GTIDs: ● Temporary holes in @@global.gtid_executed (because of gaps) ● And more… ● Skipping transactions, backups, heartbeat, …

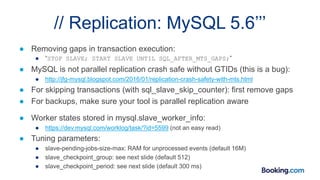
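
To make the checkpoint and gap vocabulary concrete, here is a small Python sketch. It assumes a list of transaction names in master binlog order and a set of transactions already committed on the slave; the function name and data layout are illustrative and do not reflect how MySQL stores this state.

```python
def checkpoint_and_gaps(binlog, committed):
    """Return (transactions covered by the checkpoint, gaps).

    The checkpoint covers the longest prefix of the binlog that is fully
    committed. Gaps are uncommitted transactions located before the last
    committed one.
    """
    committed = set(committed)
    checkpoint = 0
    while checkpoint < len(binlog) and binlog[checkpoint] in committed:
        checkpoint += 1
    last = max((i for i, trx in enumerate(binlog) if trx in committed), default=-1)
    gaps = [trx for trx in binlog[checkpoint:last + 1] if trx not in committed]
    return binlog[:checkpoint], gaps

binlog = ["A1", "A2", "B1", "B2", "A3", "B3"]

# Schema A is ahead of schema B: A3 is committed while B2 is still running.
print(checkpoint_and_gaps(binlog, {"A1", "A2", "B1", "A3"}))
# Schema B has not committed anything yet.
print(checkpoint_and_gaps(binlog, {"A1", "A2", "A3"}))
```

In the first call the checkpoint covers A1, A2 and B1, and B2 is a gap because A3 committed after it.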

- 9. // Replication: MySQL 5.6’’’ ● Removing gaps in transaction execution: ● “STOP SLAVE; START SLAVE UNTIL SQL_AFTER_MTS_GAPS;” ● MySQL is not parallel replication crash safe without GTIDs (this is a bug): ● http://jfg-mysql.blogspot.com/2016/01/replication-crash-safety-with-mts.html ● For skipping transactions (with sql_slave_skip_counter): first remove gaps ● For backups, make sure your tool is parallel replication aware ● Worker states stored in mysql.slave_worker_info: ● https://dev.mysql.com/worklog/task/?id=5599 (not an easy read) ● Tuning parameters: ● slave-pending-jobs-size-max: RAM for unprocessed events (default 16M) ● slave_checkpoint_group: see next slide (default 512) ● slave_checkpoint_period: see next slide (default 300 ms)

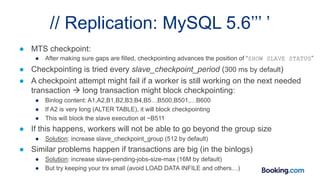

- 10. // Replication: MySQL 5.6’’’ ’ ● MTS checkpoint: ● After making sure gaps are filled, checkpointing advances the position of “SHOW SLAVE STATUS” ● Checkpointing is tried every slave_checkpoint_period (300 ms by default) ● A checkpoint attempt might fail if a worker is still working on the next needed transaction ● So a long transaction might block checkpointing: ● Binlog content: A1,A2,B1,B2,B3,B4,B5…B500,B501,…B600 ● If A2 is very long (ALTER TABLE), it will block checkpointing ● This will block the slave execution at ~B511 ● If this happens, workers will not be able to go beyond the group size ● Solution: increase slave_checkpoint_group (512 by default) ● Similar problems happen if transactions are big (in the binlogs) ● Solution: increase slave-pending-jobs-size-max (16M by default) ● But try keeping your trx small (avoid LOAD DATA INFILE and others…)

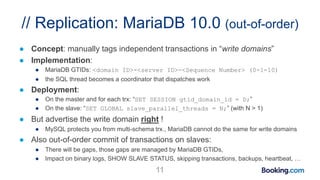
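
The "~B511" figure on the slide above can be reproduced with a deliberately simplified model: assume the coordinator cannot hand out more than slave_checkpoint_group transactions past the last checkpoint. The function below is a hypothetical illustration of that assumption, not the server's actual scheduling code.

```python
def last_dispatchable(binlog, checkpointed, checkpoint_group):
    """Last transaction workers can reach while the checkpoint is stuck.

    checkpointed: number of transactions at the start of the binlog that are
    already covered by the checkpoint.
    checkpoint_group: value of slave_checkpoint_group (simplified model).
    """
    window_end = min(checkpointed + checkpoint_group, len(binlog))
    return binlog[window_end - 1]

# Binlog content from the slide: A1, A2, B1 ... B600
binlog = ["A1", "A2"] + [f"B{i}" for i in range(1, 601)]

# A1 is committed, A2 (the long ALTER TABLE) blocks the checkpoint.
print(last_dispatchable(binlog, checkpointed=1, checkpoint_group=512))
# With a larger group size, the workers can process the whole backlog.
print(last_dispatchable(binlog, checkpointed=1, checkpoint_group=1024))
```

Under this model the 512 transactions after the checkpoint are A2 and B1 to B511, which matches the order of magnitude given on the slide.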

- 11. // Replication: MariaDB 10.0 (out-of-order) ● Concept: manually tag independent transactions in “write domains” ● Implementation: ● MariaDB GTIDs: <domain ID>-<server ID>-<Sequence Number> (e.g. 0-1-10) ● the SQL thread becomes a coordinator that dispatches work ● Deployment: ● On the master and for each trx: “SET SESSION gtid_domain_id = D;” ● On the slave: “SET GLOBAL slave_parallel_threads = N;” (with N > 1) ● But advertise the write domain right! ● MySQL protects you from multi-schema trx, MariaDB cannot do the same for write domains ● Also out-of-order commit of transactions on slaves: ● There will be gaps, those gaps are managed by MariaDB GTIDs ● Impact on binary logs, SHOW SLAVE STATUS, skipping transactions, backups, heartbeat, …

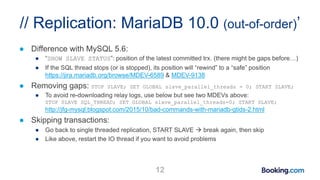
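
As an illustration of the GTID format above, this Python sketch splits a MariaDB GTID into its three fields and groups a list of GTIDs by write domain. The class and function names are hypothetical, and the sample GTIDs other than 0-1-10 are made up for the example.

```python
from collections import defaultdict
from typing import NamedTuple

class MariaDBGtid(NamedTuple):
    domain_id: int
    server_id: int
    sequence_number: int

def parse_gtid(text):
    """Parse '<domain ID>-<server ID>-<sequence number>' into a MariaDBGtid."""
    domain, server, sequence = (int(part) for part in text.split("-"))
    return MariaDBGtid(domain, server, sequence)

def by_domain(gtids):
    """Group GTIDs per write domain; each domain is an independent stream."""
    streams = defaultdict(list)
    for text in gtids:
        streams[parse_gtid(text).domain_id].append(text)
    return dict(streams)

print(parse_gtid("0-1-10"))
# Illustrative GTIDs: domains 0 and 1 could be applied out of order on a slave.
print(by_domain(["0-1-10", "1-1-4", "0-1-11", "1-1-5"]))
```

Order is only guaranteed inside a domain, which is why a transaction tagged with the wrong domain can break consistency.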

- 12. // Replication: MariaDB 10.0 (out-of-order)’ ● Difference with MySQL 5.6: ● “SHOW SLAVE STATUS”: position of the latest committed trx (there might be gaps before…) ● If the SQL thread stops (or is stopped), its position will “rewind” to a “safe” position https://jira.mariadb.org/browse/MDEV-6589 & MDEV-9138 ● Removing gaps: STOP SLAVE; SET GLOBAL slave_parallel_threads = 0; START SLAVE; ● To avoid re-downloading relay logs, use the commands below, but see the two MDEVs above: STOP SLAVE SQL_THREAD; SET GLOBAL slave_parallel_threads = 0; START SLAVE; http://jfg-mysql.blogspot.com/2015/10/bad-commands-with-mariadb-gtids-2.html ● Skipping transactions: ● Go back to single-threaded replication, START SLAVE, wait for replication to break again, then skip ● Like above, restart the IO thread if you want to avoid problems

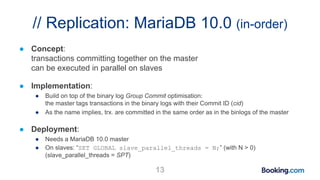

- 13. // Replication: MariaDB 10.0 (in-order) ● Concept: transactions committing together on the master can be executed in parallel on slaves ● Implementation: ● Built on top of the binary log Group Commit optimisation: the master tags transactions in the binary logs with their Commit ID (cid) ● As the name implies, trx are committed in the same order as in the binlogs of the master ● Deployment: ● Needs a MariaDB 10.0 master ● On slaves: “SET GLOBAL slave_parallel_threads = N;” (with N > 0) (slave_parallel_threads = SPT)

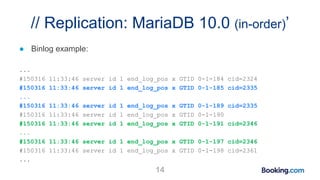

- 14. // Replication: MariaDB 10.0 (in-order)’ ● Binlog example:

      ...
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-184 cid=2324
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-185 cid=2335
      ...
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-189 cid=2335
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-190
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-191 cid=2346
      ...
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-197 cid=2346
      #150316 11:33:46 server id 1 end_log_pos x GTID 0-1-198 cid=2361
      ...

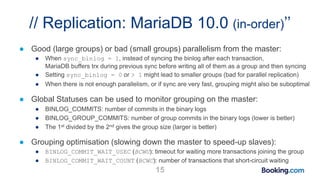

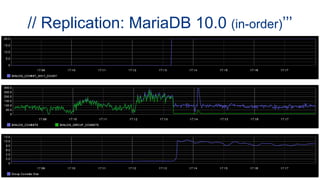
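
The binlog example above can be read mechanically: consecutive transactions sharing a cid form one group that a slave may run in parallel, and a transaction without a cid stands alone. The Python sketch below applies that reading to the lines shown on the slide; the regular expression and function name are assumptions made for this illustration, and the elided "..." lines are skipped rather than guessed.

```python
import re
from itertools import groupby

# Matches "GTID 0-1-184 cid=2324" and also "GTID 0-1-190" (no cid).
GTID_LINE = re.compile(r"GTID (\d+-\d+-\d+)(?: cid=(\d+))?")

def commit_groups(lines):
    """Return lists of GTIDs; each list is one group commit of the master."""
    events = []
    for line in lines:
        match = GTID_LINE.search(line)
        if match:  # lines such as "..." are ignored
            events.append((match.group(1), match.group(2)))
    groups = []
    for cid, members in groupby(events, key=lambda event: event[1]):
        gtids = [gtid for gtid, _ in members]
        if cid is None:
            groups.extend([gtid] for gtid in gtids)  # no cid: each trx is alone
        else:
            groups.append(gtids)
    return groups

sample = [
    "...",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-184 cid=2324",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-185 cid=2335",
    "...",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-189 cid=2335",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-190",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-191 cid=2346",
    "...",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-197 cid=2346",
    "#150316 11:33:46 server id 1 end_log_pos x GTID 0-1-198 cid=2361",
    "...",
]

for group in commit_groups(sample):
    print(group)
```

Only the GTIDs visible in the excerpt appear in the output, so the group for cid 2335 lists 0-1-185 and 0-1-189 even though the elided lines presumably hold more members.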

- 15. // Replication: MariaDB 10.0 (in-order)’’ ● Good (large groups) or bad (small groups) parallelism from the master: ● When sync_binlog = 1, instead of syncing the binlog after each transaction, MariaDB buffers trx arriving during the previous sync, then writes all of them as a group and syncs once ● Setting sync_binlog = 0 or > 1 might lead to smaller groups (bad for parallel replication) ● When there is not enough parallelism, or if syncs are very fast, grouping might also be suboptimal ● Global Statuses can be used to monitor grouping on the master: ● BINLOG_COMMITS: number of commits in the binary logs ● BINLOG_GROUP_COMMITS: number of group commits in the binary logs (lower is better) ● The 1st divided by the 2nd gives the group size (larger is better) ● Grouping optimisation (slowing down the master to speed up slaves): ● BINLOG_COMMIT_WAIT_USEC (BCWU): timeout for waiting for more transactions to join the group ● BINLOG_COMMIT_WAIT_COUNT (BCWC): number of transactions that short-circuits waiting
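
The group size computation described above is a simple ratio. Because both statuses are cumulative counters, the sketch below works on the difference between two samples; the sample values are invented for the example and the function name is hypothetical.

```python
def average_group_size(before, after):
    """Average group commit size between two samples of the global statuses.

    before/after: dicts holding BINLOG_COMMITS and BINLOG_GROUP_COMMITS.
    Returns None when no group commit happened between the samples.
    """
    commits = after["BINLOG_COMMITS"] - before["BINLOG_COMMITS"]
    groups = after["BINLOG_GROUP_COMMITS"] - before["BINLOG_GROUP_COMMITS"]
    if groups == 0:
        return None
    return commits / groups

# Invented sample values, for illustration only.
sample_1 = {"BINLOG_COMMITS": 1000, "BINLOG_GROUP_COMMITS": 400}
sample_2 = {"BINLOG_COMMITS": 4000, "BINLOG_GROUP_COMMITS": 1000}

print(average_group_size(sample_1, sample_2))  # 3000 commits / 600 groups
```

Sampling at a fixed interval and plotting the ratio gives a view of how much parallelism the master exposes to its slaves over time.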

- 16. // Replication: MariaDB 10.0 (in-order)’’’

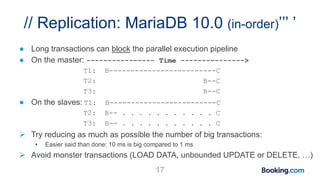

- 17. // Replication: MariaDB 10.0 (in-order)’’’ ’ ● Long transactions can block the parallel execution pipeline ● On the master:

      ---------------- Time --------------->
      T1: B-------------------------C
      T2:                        B--C
      T3:                        B--C

  ● On the slaves:

      T1: B-------------------------C
      T2: B-- . . . . . . . . . . . C
      T3: B-- . . . . . . . . . . . C

  ● Try reducing as much as possible the number of big transactions: ● Easier said than done: 10 ms is big compared to 1 ms ● Avoid monster transactions (LOAD DATA, unbounded UPDATE or DELETE, …)

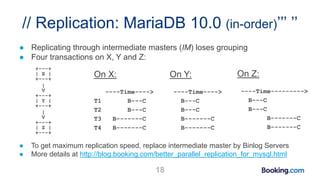
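
The stall shown on the slide above follows from in-order commit: a transaction cannot commit before the one preceding it in the binlog. A minimal Python model of that rule is sketched below, with made-up durations in arbitrary time units; it assumes all transactions of a group start at the same time on the slave.

```python
def in_order_commit_times(transactions):
    """Commit times under in-order commit.

    transactions: list of (begin, duration) in binlog order, all running in
    parallel. A transaction commits when it is done executing AND the
    previous transaction in the binlog has committed.
    """
    commits = []
    previous_commit = 0
    for begin, duration in transactions:
        ready = begin + duration
        commit = max(ready, previous_commit)
        commits.append(commit)
        previous_commit = commit
    return commits

# T1 is long (25 units); T2 and T3 are short (3 units) but follow T1 in the binlog.
print(in_order_commit_times([(0, 25), (0, 3), (0, 3)]))
# With the long transaction last, the short ones are not delayed.
print(in_order_commit_times([(0, 3), (0, 3), (0, 25)]))
```

In the first case T2 and T3 hold their worker threads idle for 22 units, which is the blocked pipeline the slide warns about.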

- 18. // Replication: MariaDB 10.0 (in-order)’’’ ’’ ● Replicating through intermediate masters (IM) loses grouping ● Four transactions on X, Y and Z (replication chain: X --> Y --> Z): ● On X (----Time---->): T1 B---C, T2 B---C, T3 B-------C, T4 B-------C ● On Y (----Time---->): B---C, B---C, B-------C, B-------C ● On Z (----Time--------->): B---C, B---C, B-------C, B-------C ● To get maximum replication speed, replace intermediate masters by Binlog Servers ● More details at http://blog.booking.com/better_parallel_replication_for_mysql.html

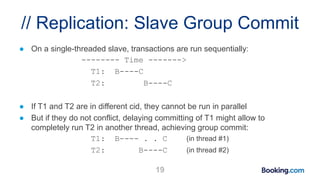

- 19. // Replication: Slave Group Commit ● On a single-threaded slave, transactions are run sequentially:

      -------- Time ------->
      T1: B----C
      T2:       B----C

  ● If T1 and T2 are in different cid, they cannot be run in parallel ● But if they do not conflict, delaying the commit of T1 might allow T2 to run completely in another thread, achieving group commit:

      T1: B---- . . C    (in thread #1)
      T2:      B----C    (in thread #2)

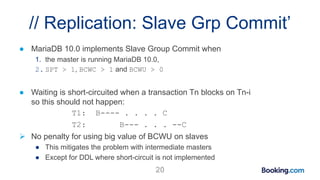

- 20. // Replication: Slave Grp Commit’ ● MariaDB 10.0 implements Slave Group Commit when 1. the master is running MariaDB 10.0, 2. SPT > 1, BCWC > 1 and BCWU > 0 ● Waiting is short-circuited when a transaction Tn blocks on Tn-i, so this should not happen:

      T1: B---- . . . . C
      T2: B--- . . . --C

  ● No penalty for using a big value of BCWU on slaves ● This mitigates the problem with intermediate masters ● Except for DDL where short-circuit is not implemented

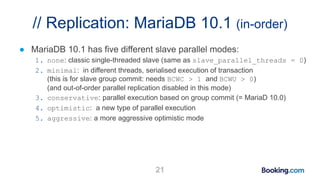

- 21. // Replication: MariaDB 10.1 (in-order) ● MariaDB 10.1 has five different slave parallel modes: 1. none: classic single-threaded slave (same as slave_parallel_threads = 0) 2. minimal: in different threads, serialised execution of transactions (this is for slave group commit: needs BCWC > 1 and BCWU > 0) (and out-of-order parallel replication is disabled in this mode) 3. conservative: parallel execution based on group commit (= MariaDB 10.0) 4. optimistic: a new type of parallel execution 5. aggressive: a more aggressive optimistic mode

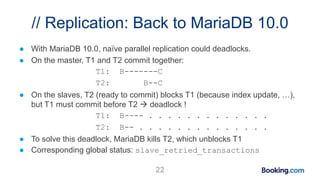

- 22. // Replication: Back to MariaDB 10.0 ● With MariaDB 10.0, naïve parallel replication could deadlock ● On the master, T1 and T2 commit together:

      T1: B-------C
      T2:      B--C

  ● On the slaves, T2 (ready to commit) blocks T1 (because of an index update, …), but T1 must commit before T2: deadlock!

      T1: B---- . . . . . . . . . . . . .
      T2: B-- . . . . . . . . . . . . . .

  ● To solve this deadlock, MariaDB kills T2, which unblocks T1 ● Corresponding global status: slave_retried_transactions

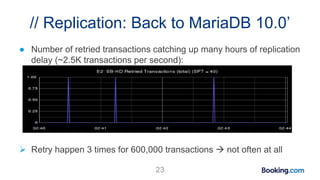

- 23. // Replication: Back to MariaDB 10.0’ ● Number of retried transactions while catching up many hours of replication delay (~2.5K transactions per second): ● Retries happened 3 times for 600,000 transactions: not often at all

- 24. // Replication: MariaDB 10.1 (optimistic) ● Concept: run all transactions in parallel, if they conflict (replication blocked because of in-order commit), deadlock detection unblocks the slave ● Deployment: ● Needs a MariaDB 10.1 master ● Assumes the same table transactional properties on master and slave (could produce corrupted results if the master is InnoDB and the slave MyISAM) ● SET GLOBAL slave_parallel_threads = N; (with N > 1) ● SET GLOBAL slave_parallel_mode = {optimistic | aggressive}; ● Optimistic will try to reduce the number of deadlocks (and rollbacks) using information put in the binary logs by the master, aggressive will run as many transactions in parallel as possible (bounded by the number of threads) ● DDLs cannot be rolled back, so they cannot be replicated optimistically: a DDL blocks the parallel replication pipeline (and same for other non-transactional operations)

- 25. // Replication: MySQL 5.7 ● MySQL 5.7 has different slave parallel types: ● DATABASE: the schema-based parallel replication from MySQL 5.6 ● LOGICAL_CLOCK: “Transactions that are part of the same binary log group commit on a master are applied in parallel on a slave.” (from the documentation) (the logical clock is implemented using intervals) ● Slowing down the master to speed up the slave: ● binlog_group_commit_sync_delay ● binlog_group_commit_sync_no_delay_count ● We can expect the same problems as with MariaDB 10.0: ● Problems with long/big transactions ● Problems with intermediate masters
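
The "intervals" remark above can be illustrated with a small Python sketch. It assumes each transaction carries two numbers in the MySQL 5.7 binary log, last_committed and sequence_number; these field names are not on the slide, and the rule below is a simplified reading of the scheduling logic, not the server's implementation. The sample values are made up.

```python
def can_run_in_parallel(earlier, later):
    """earlier/later: (last_committed, sequence_number), earlier first in the binlog.

    Simplified rule: the later transaction may run alongside the earlier one
    if it does not depend on the earlier one being committed, that is if its
    last_committed is lower than the earlier transaction's sequence_number.
    """
    return later[0] < earlier[1]

# Made-up transactions as (last_committed, sequence_number).
t2, t3, t4, t5 = (1, 2), (1, 3), (1, 4), (4, 5)

print(can_run_in_parallel(t2, t3))  # same interval start: parallel
print(can_run_in_parallel(t3, t4))  # same interval start: parallel
print(can_run_in_parallel(t4, t5))  # t5 needs t4 committed first
```

In this example t2, t3 and t4 overlap, while t5 has to wait until t4 has committed.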

- 26. // Replication: MySQL 5.7’ ● By default, MySQL 5.7 in logical clock does out-of-order commit: ● There will be gaps (“START SLAVE UNTIL SQL_AFTER_MTS_GAPS;”) ● Not replication crash safe without GTIDs http://jfg-mysql.blogspot.co.uk/2016/01/replication-crash-safety-with-mts.html ● And also everything else: binary logs content, SHOW SLAVE STATUS, skipping transactions, backups, … ● Using slave_preserve_commit_order = 1 does what you expect ● This configuration does not generate gaps ● But it needs log-slave-updates, there is a feature request to remove this limitation: https://bugs.mysql.com/bug.php?id=75396 ● And it is still not replication crash safe (surprising because there are no gaps): https://bugs.mysql.com/bug.php?id=80103

- 27. // Replication: results from B.com ● MariaDB 10.0 tests: ● On four environments, from a MySQL 5.6 master, thanks to Slave Group Commit ● MariaDB 10.1 tests: conservative vs aggressive ● Same environments and transactions: how much better (or worse) will aggressive be? ● MySQL 5.6 real deployment ● MariaDB 10.0 and 10.1 real deployment ● No results from MySQL 5.7: ● I guess we can expect similar results to MariaDB 10.0

- 28. // Replication: MariaDB 10.0 ● Four environments (E1, E2, E3 and E4): ● A is a MySQL 5.6 master ● B is a MariaDB 10.0 intermediate master ● C is a MariaDB 10.0 intermediate master doing slave group commit ● D is using the group commit information from C to run transactions in parallel ● Replication chain: A --> B --> C --> D ● Note that slave group commit generates smaller groups than a group committing master, more information in: http://blog.booking.com/evaluating_mysql_parallel_replication_3-under_the_hood.html#group_commit_slave_vs_master

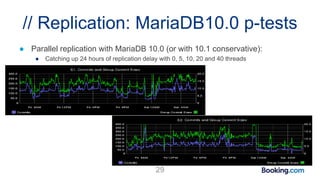

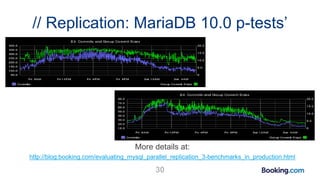

- 29. // Replication: MariaDB 10.0 p-tests ● Parallel replication with MariaDB 10.0 (or with 10.1 conservative): ● Catching up 24 hours of replication delay with 0, 5, 10, 20 and 40 threads

- 30. // Replication: MariaDB 10.0 p-tests’ ● More details at: http://blog.booking.com/evaluating_mysql_parallel_replication_3-benchmarks_in_production.html

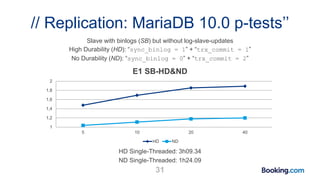

- 31. // Replication: MariaDB 10.0 p-tests’’ ● HD Single-Threaded: 3h09.34 ● ND Single-Threaded: 1h24.09 ● Slave with binlogs (SB) but without log-slave-updates ● High Durability (HD): “sync_binlog = 1” + “trx_commit = 1” ● No Durability (ND): “sync_binlog = 0” + “trx_commit = 2” ● Chart for E1 (x-axis: 5, 10, 20 and 40 threads; series: SB-HD&ND, HD and ND)

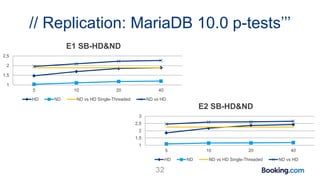
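
The two single-threaded catch-up times above can be compared with a little arithmetic. The Python sketch below assumes that "3h09.34" reads as 3 hours, 9 minutes and 34 seconds; this interpretation of the notation is an assumption, and the helper name is hypothetical.

```python
def to_seconds(text):
    """Convert a duration written like '3h09.34' (hours, minutes, seconds)."""
    hours, rest = text.split("h")
    minutes, seconds = rest.split(".")
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds)

hd = to_seconds("3h09.34")  # High Durability, single-threaded
nd = to_seconds("1h24.09")  # No Durability, single-threaded

print(hd, nd)
print(f"ND is {hd / nd:.2f}x faster than HD when single-threaded")
```

Under that reading, relaxing durability alone makes the single-threaded slave a bit more than twice as fast, which gives a baseline against which the parallel replication speedups can be compared.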

- 32. // Replication: MariaDB 10.0 p-tests’’’ ● Charts for E1 and E2 (x-axis: 5, 10, 20 and 40 threads; series: SB-HD&ND, HD, ND, ND vs HD and Single-Threaded ND vs HD)

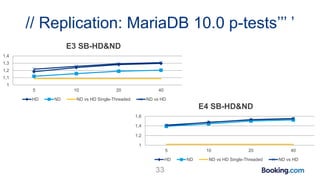

- 33. // Replication: MariaDB 10.0 p-tests’’’ ’ ● Charts for E3 and E4 (x-axis: 5, 10, 20 and 40 threads; series: SB-HD&ND, HD, ND, ND vs HD and Single-Threaded ND vs HD)

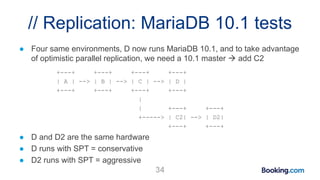

- 34. // Replication: MariaDB 10.1 tests ● Same four environments, D now runs MariaDB 10.1, and to take advantage of optimistic parallel replication, we need a 10.1 master: add C2 ● Replication chain: A --> B --> C --> D, plus a second branch ending in C2 --> D2 ● D and D2 are the same hardware ● D runs with slave_parallel_mode = conservative ● D2 runs with slave_parallel_mode = aggressive

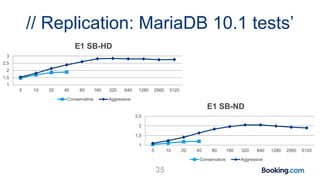

- 35. // Replication: MariaDB 10.1 tests’ ● Charts for E1 SB-HD and E1 SB-ND (x-axis: 5 to 5120 threads, doubling at each step; series: Conservative and Aggressive)

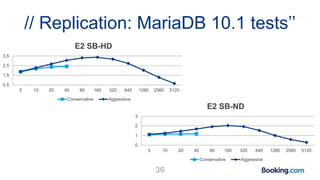

- 36. // Replication: MariaDB 10.1 tests’’ ● Charts for E2 SB-HD and E2 SB-ND (x-axis: 5 to 5120 threads, doubling at each step; series: Conservative and Aggressive)

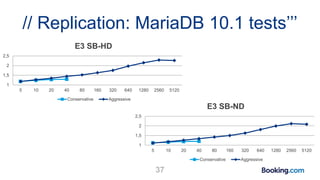

- 37. // Replication: MariaDB 10.1 tests’’’ ● Charts for E3 SB-HD and E3 SB-ND (x-axis: 5 to 5120 threads, doubling at each step; series: Conservative and Aggressive)

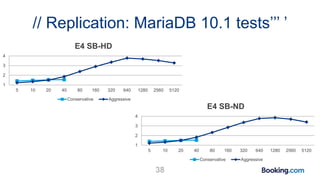

- 38. // Replication: MariaDB 10.1 tests’’’ ’ ● Charts for E4 SB-HD and E4 SB-ND (x-axis: 5 to 5120 threads, doubling at each step; series: Conservative and Aggressive)

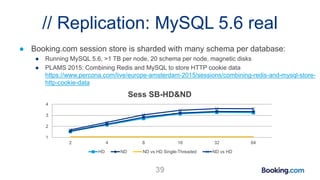

- 39. // Replication: MySQL 5.6 real ● Booking.com session store is sharded with many schemas per database: ● Running MySQL 5.6, >1 TB per node, 20 schemas per node, magnetic disks ● PLAMS 2015: Combining Redis and MySQL to store HTTP cookie data https://www.percona.com/live/europe-amsterdam-2015/sessions/combining-redis-and-mysql-store-http-cookie-data ● Chart for the session store (x-axis: 2, 4, 8, 16, 32 and 64; series: SB-HD&ND, HD, ND, ND vs HD and Single-Threaded ND vs HD)

- 40. // Replication: MariaDB 10.0 real ● Booking.com is also running MariaDB 10.0 ● We optimised group commit and enabled parallel replication: set global BINLOG_COMMIT_WAIT_COUNT = 75; set global BINLOG_COMMIT_WAIT_USEC = 300000; (300 milliseconds) set global SLAVE_PARALLEL_THREADS = 80; ● And we tuned the application a lot: ● Break big transactions into many small transactions ● Increase the number of concurrent sessions to the database

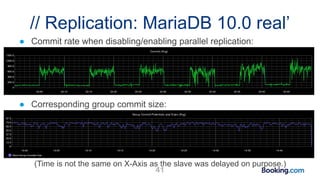

- 41. // Replication: MariaDB 10.0 real’ ● Commit rate when disabling/enabling parallel replication: ● Corresponding group commit size: (Time is not the same on X-Axis as the slave was delayed on purpose.)

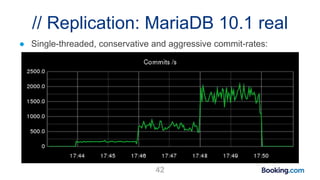

- 42. // Replication: MariaDB 10.1 real ● Single-threaded, conservative and aggressive commit-rates:

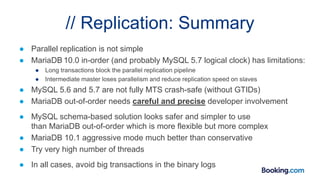

- 43. // Replication: Summary ● Parallel replication is not simple ● MariaDB 10.0 in-order (and probably MySQL 5.7 logical clock) has limitations: ● Long transactions block the parallel replication pipeline ● Intermediate masters lose parallelism and reduce replication speed on slaves ● MySQL 5.6 and 5.7 are not fully MTS crash-safe (without GTIDs) ● MariaDB out-of-order needs careful and precise developer involvement ● MySQL schema-based solution looks safer and simpler to use than MariaDB out-of-order, which is more flexible but more complex ● MariaDB 10.1 aggressive mode is much better than conservative ● Try a very high number of threads ● In all cases, avoid big transactions in the binary logs

- 44. And please test by yourself and share results

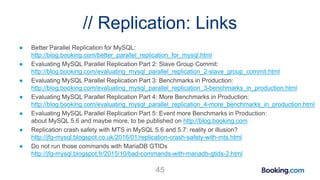

- 45. // Replication: Links ● Better Parallel Replication for MySQL: http://blog.booking.com/better_parallel_replication_for_mysql.html ● Evaluating MySQL Parallel Replication Part 2: Slave Group Commit: http://blog.booking.com/evaluating_mysql_parallel_replication_2-slave_group_commit.html ● Evaluating MySQL Parallel Replication Part 3: Benchmarks in Production: http://blog.booking.com/evaluating_mysql_parallel_replication_3-benchmarks_in_production.html ● Evaluating MySQL Parallel Replication Part 4: More Benchmarks in Production: http://blog.booking.com/evaluating_mysql_parallel_replication_4-more_benchmarks_in_production.html ● Evaluating MySQL Parallel Replication Part 5: Even more Benchmarks in Production: about MySQL 5.6 and maybe more, to be published on http://blog.booking.com ● Replication crash safety with MTS in MySQL 5.6 and 5.7: reality or illusion? http://jfg-mysql.blogspot.co.uk/2016/01/replication-crash-safety-with-mts.html ● Do not run those commands with MariaDB GTIDs http://jfg-mysql.blogspot.fr/2015/10/bad-commands-with-mariadb-gtids-2.html

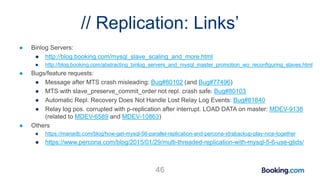

- 46. // Replication: Links’ ● Binlog Servers: ● http://blog.booking.com/mysql_slave_scaling_and_more.html ● http://blog.booking.com/abstracting_binlog_servers_and_mysql_master_promotion_wo_reconfiguring_slaves.html ● Bugs/feature requests: ● Message after MTS crash misleading: Bug#80102 (and Bug#77496) ● MTS with slave_preserve_commit_order not repl. crash safe: Bug#80103 ● Automatic Repl. Recovery Does Not Handle Lost Relay Log Events: Bug#81840 ● Relay log pos. corrupted with p-replication after interrupt. LOAD DATA on master: MDEV-9138 (related to MDEV-6589 and MDEV-10863) ● Others ● https://mariadb.com/blog/how-get-mysql-56-parallel-replication-and-percona-xtrabackup-play-nice-together ● https://www.percona.com/blog/2015/01/29/multi-threaded-replication-with-mysql-5-6-use-gtids/

- 47. Thanks Jean-François Gagné jeanfrancois DOT gagne AT booking.com